Kubernetes makes it easy to scale applications. But when it comes to CPU resource management, a poorly tuned cluster can quickly become unstable or inefficient. For network engineers, setting CPU requests and limits correctly, and understanding the deeper implications, is essential for keeping workloads efficient, costs predictable, and noisy neighbors in check.

What Are Kubernetes CPU Limits?

CPU resource management in Kubernetes is controlled using requests and limits. Requests determine how the scheduler places pods, while the kubelet enforces both requests and limits at runtime through Linux cgroups.

CPU Request vs CPU Limit

Let’s break this down:

| Concept | CPU Request | CPU Limit |
| --- | --- | --- |
| Definition | Minimum CPU a container is guaranteed to get | Maximum CPU a container can use before being throttled |
| Scheduling | Used by the scheduler for pod placement | Not used by the scheduler |
| Runtime Behavior | Sets the container's relative CPU weight, which protects its baseline share under contention | Enforced using a CFS quota; may cause throttling |
| Common Issues | Over-requesting can leave pods unschedulable; under-requesting can overcommit nodes and cause contention | Setting limits too low can lead to CPU throttling |
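To make the table concrete, the following Python sketch shows how a request and a limit turn into kernel settings on a cgroup v1 node. It assumes the conversion the kubelet uses there: a request of N millicores becomes a cpu.shares weight of N × 1024 / 1000 (minimum 2), and a limit becomes a CFS quota of N × period / 1000 microseconds per 100 ms period (minimum 1 ms). cgroup v2 nodes map the same shares onto cpu.weight with a different scale, which this sketch does not model. The function names are hypothetical.

```python
from typing import Optional

# Constants assumed to mirror the kubelet's cgroup v1 conversion helpers.
MIN_SHARES = 2
MAX_SHARES = 262_144
SHARES_PER_CPU = 1024
MILLICPU_PER_CPU = 1000
DEFAULT_PERIOD_US = 100_000  # default CFS period: 100 ms
MIN_QUOTA_US = 1_000         # quota is never set below 1 ms


def request_to_shares(request_m: int) -> int:
    """CPU request in millicores -> cgroup v1 cpu.shares (relative weight)."""
    if request_m == 0:
        return MIN_SHARES
    shares = request_m * SHARES_PER_CPU // MILLICPU_PER_CPU
    return max(MIN_SHARES, min(MAX_SHARES, shares))


def limit_to_quota_us(limit_m: Optional[int],
                      period_us: int = DEFAULT_PERIOD_US) -> Optional[int]:
    """CPU limit in millicores -> CFS quota (microseconds of CPU per period).

    Returns None when no limit is set, i.e. no quota and no throttling.
    """
    if not limit_m:
        return None
    quota = limit_m * period_us // MILLICPU_PER_CPU
    return max(MIN_QUOTA_US, quota)


if __name__ == "__main__":
    # A 500m request with a 1 CPU limit.
    print("shares:", request_to_shares(500))
    print("quota per 100 ms period (us):", limit_to_quota_us(1000))
```

For a 500m request and a 1 CPU limit, the container gets 512 shares (half the weight of a 1-CPU request) and may consume 100,000 µs of CPU time in every 100,000 µs period; anything beyond that is throttled until the next period.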

Why Setting CPU Limits Is Important

When CPU resources aren’t managed properly, stability and performance suffer. Setting requests and limits deliberately helps you:

- Prevent noisy neighbor issues: Ensure fair CPU access across pods sharing a node.

- Avoid CPU starvation: Prevent one workload from consuming so much CPU that other pods on the node are starved and services are disrupted.

- Ensure predictable performance: Reduce variability, especially for latency-sensitive services.

- Support compliance and budgeting: Track resource consumption and plan node capacity allocations in managed clusters.

How to Set CPU Limits in Kubernetes

You define CPU requests and limits in the resources field of each container in your PodSpec:

```yaml
resources:
  requests:
    cpu: "500m"
  limits:
    cpu: "1"
```

- Requests = 0.5 CPU

- Limits = 1 CPU

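This guarantees a baseline of 0.5 CPU while allowing the container to burst up to 1 CPU when the node has idle capacity. If requests equal limits for both CPU and memory in every container, the pod is assigned the Guaranteed QoS class; with whole-number CPU values, its containers become eligible for exclusive cores on nodes that enable the CPU Manager’s static policy.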

Resource Units and Measurement in Kubernetes

In Kubernetes, compute resources such as CPU and memory are quantified using standardized units to ensure consistent scheduling and enforcement across clusters.

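CPU resources are measured in CPU units, where one CPU represents one physical core or one virtual core, depending on whether the node is a physical host or a virtual machine. Fractional values are expressed in millicores (m), so 500m equals 0.5 CPU, and 1m is the finest precision Kubernetes accepts.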

Memory is measured in bytes, with support for common suffixes such as Mi (mebibytes) or Gi (gibibytes) for clarity. These units are specified in the pod or container configuration under the resources field, allowing engineers to define requests (minimum guaranteed resources) and limits (maximum allowed usage).

By accurately measuring and specifying resource units, Kubernetes can efficiently schedule workloads, enforce boundaries, and optimize resource utilization across nodes.
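The unit rules above can be captured in a small parser. This is a simplified Python sketch, not the Kubernetes quantity implementation: it handles only the common CPU forms (plain cores or an m suffix) and a subset of memory suffixes, and it simply rejects CPU values finer than 1m. The function names are hypothetical.

```python
from decimal import Decimal

# Memory suffixes handled by this sketch (binary and decimal multipliers).
MEMORY_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def parse_cpu_millicores(quantity: str) -> int:
    """Parse a CPU quantity such as "500m", "1", or "0.25" into millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    millicores = Decimal(quantity) * 1000
    if millicores != millicores.to_integral_value():
        # This sketch rejects anything finer than 1m.
        raise ValueError(f"CPU precision finer than 1m: {quantity!r}")
    return int(millicores)


def parse_memory_bytes(quantity: str) -> int:
    """Parse a memory quantity such as "256Mi" or "1G" into bytes."""
    for suffix, multiplier in MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            number = Decimal(quantity[: -len(suffix)])
            return int(number * multiplier)
    # No suffix: the value is already in bytes.
    return int(Decimal(quantity))
```

Using Decimal instead of float keeps values such as "0.25" or "1.5Gi" exact, so "0.5" and "500m" compare equal once both are converted to millicores.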

Best Practices for Setting CPU Limits

Optimizing CPU limits in Kubernetes isn’t about saving resources – it’s about keeping your applications fast, responsive, and reliable. Here’s how to get it right:

1. Start with real usage

Set your CPU requests based on how your app typically behaves. This ensures it gets scheduled reliably and performs consistently under normal conditions.

2. Don’t go too low

Setting CPU limits too tightly can backfire. It may throttle your app unnecessarily, especially if it’s multi-threaded or sensitive to latency. That means slower response times and unhappy users.

3. Consider skipping limits

In many production setups, it’s actually better to set accurate requests and omit CPU limits unless you need strict isolation or are bound by compliance rules. Let your app breathe!

4. Monitor and adjust

Use observability tools to track real-world usage. Then, tune your requests over time instead of locking things down too early.

5. Plan for multitenant environments

Running a shared cluster? Apply namespace-level policies and regularly review your settings. This helps avoid resource hogging and ensures a fair distribution of resources for everyone.
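Points 1 and 4 boil down to deriving requests from observed usage. One way to do that, sketched here in Python as a hypothetical heuristic rather than a Kubernetes feature, is to take a high percentile of recent per-pod CPU samples and add headroom. The 90th percentile, 15% headroom, and 10m rounding step are illustrative assumptions you would tune for your workload.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of non-empty samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_request_m(usage_m: Sequence[float], pct: float = 90.0,
                        headroom_pct: float = 15.0, step_m: int = 10) -> int:
    """Hypothetical heuristic: request = percentile of usage plus headroom,
    rounded up to a multiple of step_m millicores."""
    if not usage_m:
        raise ValueError("need at least one usage sample")
    target = percentile(usage_m, pct) * (100 + headroom_pct) / 100
    return int(math.ceil(target / step_m) * step_m)


if __name__ == "__main__":
    # Invented CPU usage samples in millicores, one per scrape interval.
    samples = [120, 150, 180, 200, 210, 220, 240, 260, 300, 900]
    print(recommend_request_m(samples))
```

With those invented samples, the 90th percentile is 300m and the recommendation is 350m. The single 900m spike does not inflate the request, which is the reason to use a percentile rather than the maximum; re-running this as new data arrives is the "monitor and adjust" loop.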

Advanced Node and Policy Considerations

Kubernetes offers node-level configurations that impact CPU behavior beyond basic pod settings:

CPU manager policies

- The static policy grants exclusive CPU cores to containers in Guaranteed QoS pods that request whole (integer) CPUs.

- Ideal for low-latency or real-time workloads needing core exclusivity.
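Whether a container actually gets pinned cores under the static policy depends on two conditions: the pod must be in the Guaranteed QoS class, and the container's CPU request must be a whole number of CPUs. This Python sketch encodes just those two rules, assuming the pod's QoS class is already known and the node's kubelet runs the static policy; it ignores topology alignment and reserved-CPU details. The function names are hypothetical.

```python
from typing import Dict


def exclusive_cores(qos_class: str, cpu_requests_m: Dict[str, int]) -> Dict[str, int]:
    """Exclusive cores per container under the static policy (0 = shared pool).

    qos_class: the pod's QoS class, e.g. "Guaranteed" or "Burstable".
    cpu_requests_m: container name -> CPU request in millicores.
    """
    assignments = {}
    for name, request_m in cpu_requests_m.items():
        whole_cpus = request_m > 0 and request_m % 1000 == 0
        if qos_class == "Guaranteed" and whole_cpus:
            assignments[name] = request_m // 1000  # pinned, not shared
        else:
            assignments[name] = 0                  # runs on the shared pool
    return assignments


def shared_pool_cpus(node_cpus: int, assignments: Dict[str, int]) -> int:
    """CPUs left in the shared pool after exclusive assignments."""
    return node_cpus - sum(assignments.values())
```

For example, a Guaranteed pod with a 2-CPU app container and a 500m sidecar gets two pinned cores for the app while the sidecar stays on the shared pool; on an 8-CPU node, that leaves 6 CPUs shared by everything else.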

Node allocatable and reserved resources

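- Nodes can reserve CPU for operating system daemons (system-reserved) and for Kubernetes components such as the kubelet and container runtime (kube-reserved); what remains is the node's allocatable CPU.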

- Engineers can query a node’s allocatable values, which are reported in the node’s status, to verify how much CPU pods can actually use.
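Allocatable CPU is what the scheduler actually works with. Here is a minimal Python sketch, assuming the standard formula (capacity minus kube-reserved minus system-reserved; eviction thresholds reduce memory and storage, not CPU) and the scheduler's rule that a pod fits only if the sum of CPU requests on the node, including the new pod, stays within allocatable. The node sizes are made-up example values and the function names are hypothetical.

```python
from typing import Sequence


def allocatable_m(capacity_m: int, kube_reserved_m: int = 0,
                  system_reserved_m: int = 0) -> int:
    """Node allocatable CPU in millicores: capacity minus reservations.
    (Hard eviction thresholds reduce allocatable memory/storage, not CPU.)"""
    return capacity_m - kube_reserved_m - system_reserved_m


def fits(new_request_m: int, existing_requests_m: Sequence[int],
         node_allocatable_m: int) -> bool:
    """Scheduler-style check: requests, not live usage or limits, must fit."""
    return sum(existing_requests_m) + new_request_m <= node_allocatable_m
```

On a 4-CPU node with 100m reserved for Kubernetes components and 100m for the OS, 3800m is allocatable. If pods already request 3500m, a new 500m pod does not fit even when actual usage is low, because placement is based on requests rather than usage.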

Scheduler extenders and affinities

- Use scheduler extenders and node affinity rules to place services on suitable nodes.

- Prevent cross-socket memory traffic and context switching by using NUMA-aware scheduling.

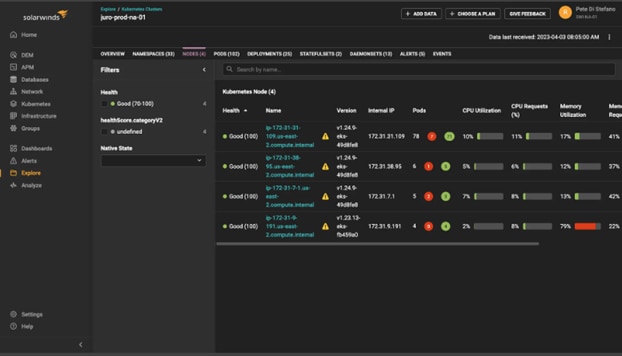

Node-level monitoring

- Use historical node-level event data to detect noisy neighbors, underutilization, or resource leaks.

- Extend visibility with tools such as SolarWinds® Observability.

CPU Throttling and Its Implications

CPU throttling happens when a container uses up its CFS quota, the CPU time its limit allows per scheduling period (100 ms by default), before the period ends; the container's threads then cannot run until the next period starts. Throttling is one of several problems that misconfigured CPU settings cause over time:

| Mistake | Impact |
| --- | --- |
| Limits = Requests | No bursting allowed; may block scheduling |
| No Limits | Risk of CPU starvation, cache misses, and noisy neighbors |
| Low Limits | Risk of CPU throttling, threading issues, and latency spikes |
| Ignoring Autoscaling | Risk of conflicts with HPA; may lead to pod thrashing |
| Overprovisioning without Node Awareness | Risk of exhausting node allocatable CPU, which blocks new pods from being scheduled |
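The threading issues and latency spikes listed for low limits follow from how the quota works: the limit is a budget of CPU time per period shared by all of a container's threads, so parallel threads drain it faster. This Python sketch models one CFS period under simplifying assumptions (every busy thread wants a full CPU for the whole period, the node has enough idle cores to run them in parallel, and the period is the default 100 ms). It is an arithmetic illustration, not a kernel simulation, and the function name is hypothetical.

```python
from typing import Tuple


def throttle_per_period(busy_threads: int, limit_m: int,
                        period_ms: float = 100.0) -> Tuple[float, float]:
    """Return (running_ms, throttled_ms) of wall-clock time in one CFS period.

    Simplified model: each busy thread wants a full CPU for the whole period,
    and the node has enough idle cores to run all threads in parallel.
    """
    quota_ms = limit_m / 1000 * period_ms   # CPU time allowed per period
    demand_ms = busy_threads * period_ms    # CPU time the threads want
    if demand_ms <= quota_ms:
        return period_ms, 0.0               # never runs out of quota
    running_ms = quota_ms / busy_threads    # threads drain the quota in parallel
    return running_ms, period_ms - running_ms
```

With a 1 CPU limit, four busy threads burn the 100 ms quota in 25 ms of wall-clock time and then sit throttled for the remaining 75 ms. A request that arrives during that window waits, which shows up as a latency spike even when the container's average usage looks modest.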

Namespace Resource Quotas and Limit Ranges

To enforce CPU policies at scale, use namespace-level constraints:

| Object | Purpose |
| --- | --- |
| ResourceQuota | Sets aggregate limits on CPU/memory for all pods in a namespace |
| LimitRange | Defines default CPU requests/limits, and min/max values per container or pod |
| Quality of Service (QoS) | Kubernetes assigns QoS classes (Guaranteed, Burstable, BestEffort) based on request/limit configuration |

Use these to:

- Enforce minimum and maximum CPU constraints.

- Prevent excessive resource consumption.

- Improve fairness in multi-user or managed clusters.
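The QoS row deserves a closer look, because the class is derived entirely from requests and limits. This Python sketch applies the rules to a pod's app containers: BestEffort if no container sets any CPU or memory request or limit, Guaranteed if every container sets CPU and memory limits with requests equal to them (an unset request defaults to its limit), and Burstable otherwise. It assumes values are already normalized to millicores and bytes, and it ignores init containers, which real Kubernetes also considers. The type and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ContainerResources:
    """CPU in millicores, memory in bytes; None means the field is unset."""
    cpu_request: Optional[int] = None
    cpu_limit: Optional[int] = None
    memory_request: Optional[int] = None
    memory_limit: Optional[int] = None


def _is_guaranteed(c: ContainerResources) -> bool:
    # Both limits are required; an unset request defaults to its limit.
    if c.cpu_limit is None or c.memory_limit is None:
        return False
    cpu_req = c.cpu_limit if c.cpu_request is None else c.cpu_request
    mem_req = c.memory_limit if c.memory_request is None else c.memory_request
    return cpu_req == c.cpu_limit and mem_req == c.memory_limit


def qos_class(containers: List[ContainerResources]) -> str:
    """Return "Guaranteed", "Burstable", or "BestEffort" for a pod."""
    unset = all(
        v is None
        for c in containers
        for v in (c.cpu_request, c.cpu_limit, c.memory_request, c.memory_limit)
    )
    if unset:
        return "BestEffort"
    if all(_is_guaranteed(c) for c in containers):
        return "Guaranteed"
    return "Burstable"
```

The earlier example (500m request, 1 CPU limit, no memory settings) is Burstable; setting CPU and memory requests equal to their limits in every container makes the pod Guaranteed.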

How to Monitor and Optimize CPU Usage

Monitoring CPU metrics is crucial for detecting throttling, identifying misconfigurations, and enhancing performance.

Key tools

- kubectl top: Current CPU and memory usage per pod or node (requires the Metrics Server)

- Prometheus + Grafana: Visualize trends and compare requests vs actual usage

- SolarWinds Observability: Deep Kubernetes insights with pod-, node-, and container-level visibility

© 2025 SolarWinds Worldwide, LLC. All rights reserved.

Track these metrics:

| Metric | Why It Matters |
| --- | --- |
| container.cpu.usage | Shows real-time CPU consumption |
| container.cpu.throttled | Detects limits causing performance degradation |
| CPU saturation or run queue length | Indicates overloaded nodes |
| Node allocatable vs. usage | Highlights overprovisioned or underutilized resources |
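Usage and throttling metrics like these are usually derived from cumulative counters, so what matters is the change between two samples. As a minimal Python sketch, assuming a cgroup v2 node where the container's cpu.stat file reports usage_usec, nr_periods, and nr_throttled (the file's location depends on the container runtime and cgroup driver, and the snapshot values here are invented), the following computes average CPU usage in cores, usage relative to the request, and the fraction of CFS periods that were throttled. The function names are hypothetical.

```python
from typing import Dict


def parse_cpu_stat(text: str) -> Dict[str, int]:
    """Parse cgroup v2 cpu.stat content ("key value" per line) into a dict."""
    stats: Dict[str, int] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            stats[parts[0]] = int(parts[1])
    return stats


def summarize(before: str, after: str, interval_s: float,
              request_m: int) -> Dict[str, float]:
    """Compare two cpu.stat snapshots taken interval_s seconds apart."""
    b, a = parse_cpu_stat(before), parse_cpu_stat(after)
    # usage_usec is cumulative CPU time; its rate is average cores in use.
    usage_cores = (a["usage_usec"] - b["usage_usec"]) / 1_000_000 / interval_s
    periods = a["nr_periods"] - b["nr_periods"]
    throttled = a["nr_throttled"] - b["nr_throttled"]
    return {
        "usage_cores": usage_cores,
        "usage_vs_request": usage_cores * 1000 / request_m,
        "throttled_ratio": throttled / periods if periods else 0.0,
    }


# Invented snapshots taken 10 seconds apart.
BEFORE = """usage_usec 50000000
nr_periods 1000
nr_throttled 100"""
AFTER = """usage_usec 56000000
nr_periods 1100
nr_throttled 125"""

if __name__ == "__main__":
    print(summarize(BEFORE, AFTER, interval_s=10.0, request_m=500))
```

In this invented sample, the container averaged 0.6 CPU against a 500m request (120% of its request) and was throttled in a quarter of its enforcement periods, a sign that its limit may be too tight for its bursts.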

Conclusion

As clusters scale and multitenant deployments become standard, tuning Kubernetes CPU settings goes far beyond YAML configuration. Network engineers must understand CFS quotas, CPU Manager policies, node allocatable resources, and namespace-level quotas to build truly performant and resilient systems.

Gain full visibility into CPU throttling, pod scheduling behavior, and node-level resource usage with SolarWinds Observability for Kubernetes.